Titled “A 510μW 0.738-mm² 6.2-pJ/SOP Online Learning Multi-Topology SNN Processor with Unified Computation Engine in 40-nm CMOS”, this contribution has been published in IEEE Transactions on Biomedical Circuits and Systems. In this paper, we built a reconfigurable online-learning neuromorphic processor named RAINE that achieves high energy efficiency and flexibility simultaneously.

Congratulations to Chaoming Fang and the other CenBRAIN Neurotech members on this excellent achievement!

Citation

C. Fang, C. Wang, S. Zhao, F. Tian, J. Yang and M. Sawan, "A 510μW 0.738-mm² 6.2-pJ/SOP Online Learning Multi-Topology SNN Processor with Unified Computation Engine in 40-nm CMOS," in IEEE Transactions on Biomedical Circuits and Systems, doi: 10.1109/TBCAS.2023.3279367.

More information can be found at this link:

https://europepmc.org/article/med/37224372

Abstract

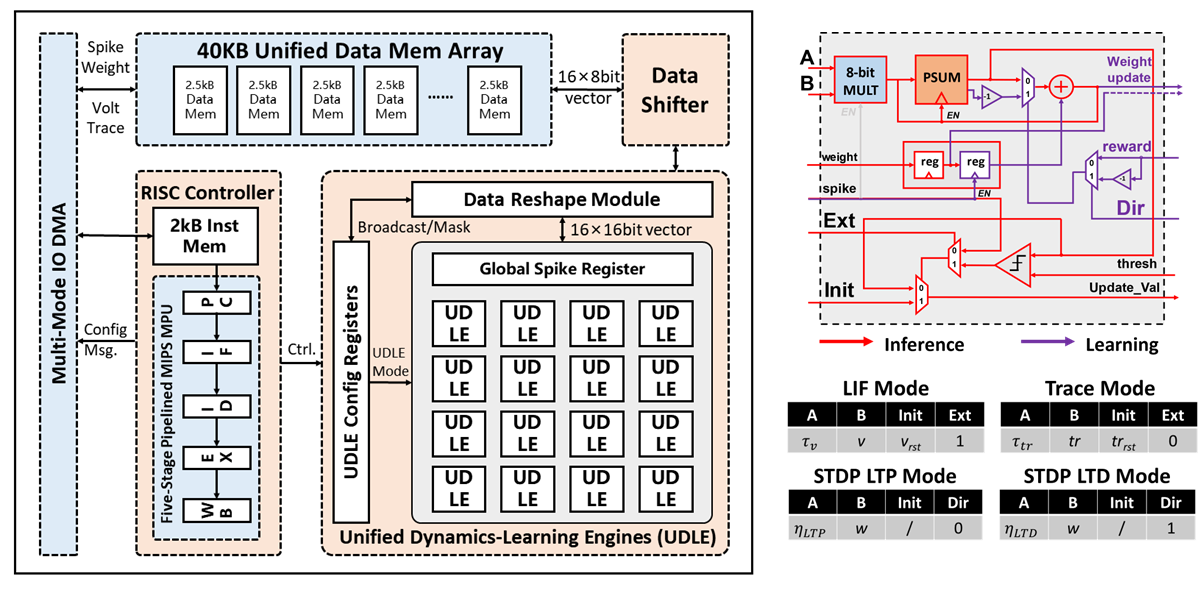

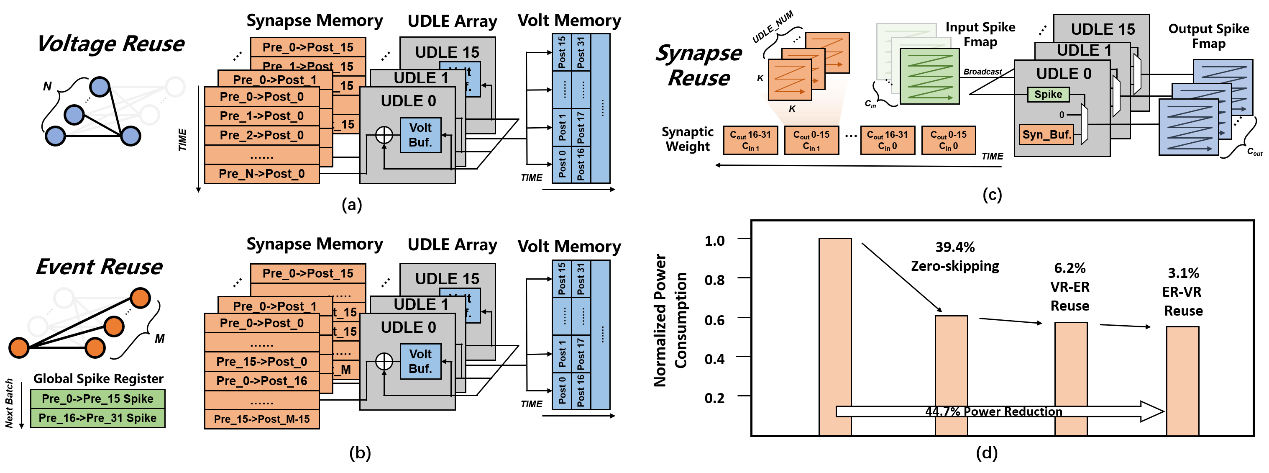

Implementing neural networks (NN) on edge devices enables AI to be applied in many daily scenarios. The stringent area and power budget on edge devices impose challenges on conventional NNs with massive energy-consuming Multiply Accumulation (MAC) operations and offer an opportunity for Spiking Neural Networks (SNN), which can be implemented within sub-mW power budget. However, mainstream SNN topologies vary from Spiking Feedforward Neural Network (SFNN), Spiking Recurrent Neural Network (SRNN), to Spiking Convolutional Neural Network (SCNN), and it is challenging for the edge SNN processor to adapt to different topologies. Besides, online learning ability is critical for edge devices to adapt to local environments but comes with dedicated learning modules, further increasing area and power consumption burdens. To alleviate these problems, this work proposed RAINE, a reconfigurable neuromorphic engine supporting multiple SNN topologies and a dedicated trace-based rewarded spike-timing-dependent plasticity (TR-STDP) learning algorithm. Sixteen Unified-Dynamics Learning-Engines (UDLEs) are implemented in RAINE to realize a compact and reconfigurable implementation of different SNN operations. Three topology-aware data reuse strategies are proposed and analyzed to optimize the mapping of different SNNs on RAINE. A 40-nm prototype chip is fabricated, achieving energy-per-synaptic-operation (SOP) of 6.2 pJ/SOP at 0.51 V, and power consumption of 510 μW at 0.45 V. Finally, three examples with different SNN topologies, including SRNN-based ECG arrhythmia detection, SCNN-based 2D image classification, and end-to-end on-chip learning for MNIST digit recognition, are demonstrated on RAINE with ultra-low energy consumption of 97.7 nJ/step, 6.28 μJ/sample, and 42.98 μJ/sample respectively. These results show the feasibility of obtaining high reconfigurability and low power consumption simultaneously on an SNN processor.

Fig. 1: Overall architecture of the chip and design of the neural dynamics computing engine, which integrates inference and training.

Fig. 2: Schematic diagram of the topology-aware data reuse strategies and their effect on energy consumption.
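
For readers unfamiliar with the learning rule named in the abstract, the general idea behind a trace-based, reward-modulated STDP update can be sketched in a few lines of Python. This is a generic textbook-style illustration, not RAINE's TR-STDP algorithm or its hardware datapath: the `Synapse` class, the `step` function, and every constant (decay factors, amplitudes, learning rate, weight bounds) are assumptions chosen only for this example.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    """State of one synapse (hypothetical layout, for illustration only)."""
    weight: float = 0.5
    pre_trace: float = 0.0    # decaying memory of recent presynaptic spikes
    post_trace: float = 0.0   # decaying memory of recent postsynaptic spikes
    eligibility: float = 0.0  # accumulated STDP term awaiting a reward signal


def step(syn, pre_spike, post_spike, reward, *,
         trace_decay=0.9, elig_decay=0.9,
         a_plus=0.1, a_minus=0.12, lr=0.5,
         w_min=0.0, w_max=1.0):
    """Advance one time step and return the updated weight.

    All default parameter values are assumed, not taken from the paper.
    """
    # 1. Let both spike traces decay.
    syn.pre_trace *= trace_decay
    syn.post_trace *= trace_decay

    # 2. STDP term computed from the traces of *earlier* spikes:
    #    a post spike following recent pre activity potentiates,
    #    a pre spike following recent post activity depresses.
    stdp = 0.0
    if post_spike:
        stdp += a_plus * syn.pre_trace
    if pre_spike:
        stdp -= a_minus * syn.post_trace

    # 3. Record the current spikes in the traces.
    if pre_spike:
        syn.pre_trace += 1.0
    if post_spike:
        syn.post_trace += 1.0

    # 4. Accumulate the STDP term into a decaying eligibility value.
    syn.eligibility = syn.eligibility * elig_decay + stdp

    # 5. The reward gates the actual weight change; clip to the allowed range.
    new_weight = syn.weight + lr * reward * syn.eligibility
    syn.weight = min(w_max, max(w_min, new_weight))
    return syn.weight


if __name__ == "__main__":
    syn = Synapse()
    step(syn, pre_spike=True, post_spike=False, reward=0.0)
    print(step(syn, pre_spike=False, post_spike=True, reward=1.0))
```

In this sketch a pre-then-post spike pair raises the weight only when the reward is positive, lowers it when the reward is negative, and leaves it unchanged when the reward is zero. Keeping only per-synapse traces, rather than a history of spike times, is what makes rules of this family attractive for compact on-chip learning.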