From September 29 to October 4, 2024, the 18th European Conference on Computer Vision (ECCV) was held in Milan, Italy. As one of the most prestigious conferences in computer vision, ECCV is regarded, together with CVPR and ICCV, as one of the field's “top three” conferences. ECCV is held every two years and covers all subfields of computer vision, including but not limited to image recognition, object detection, scene understanding, visual tracking, 3D reconstruction, embodied intelligence, and deep learning applications in vision.

/Milan Convention Center, venue of ECCV 2024/

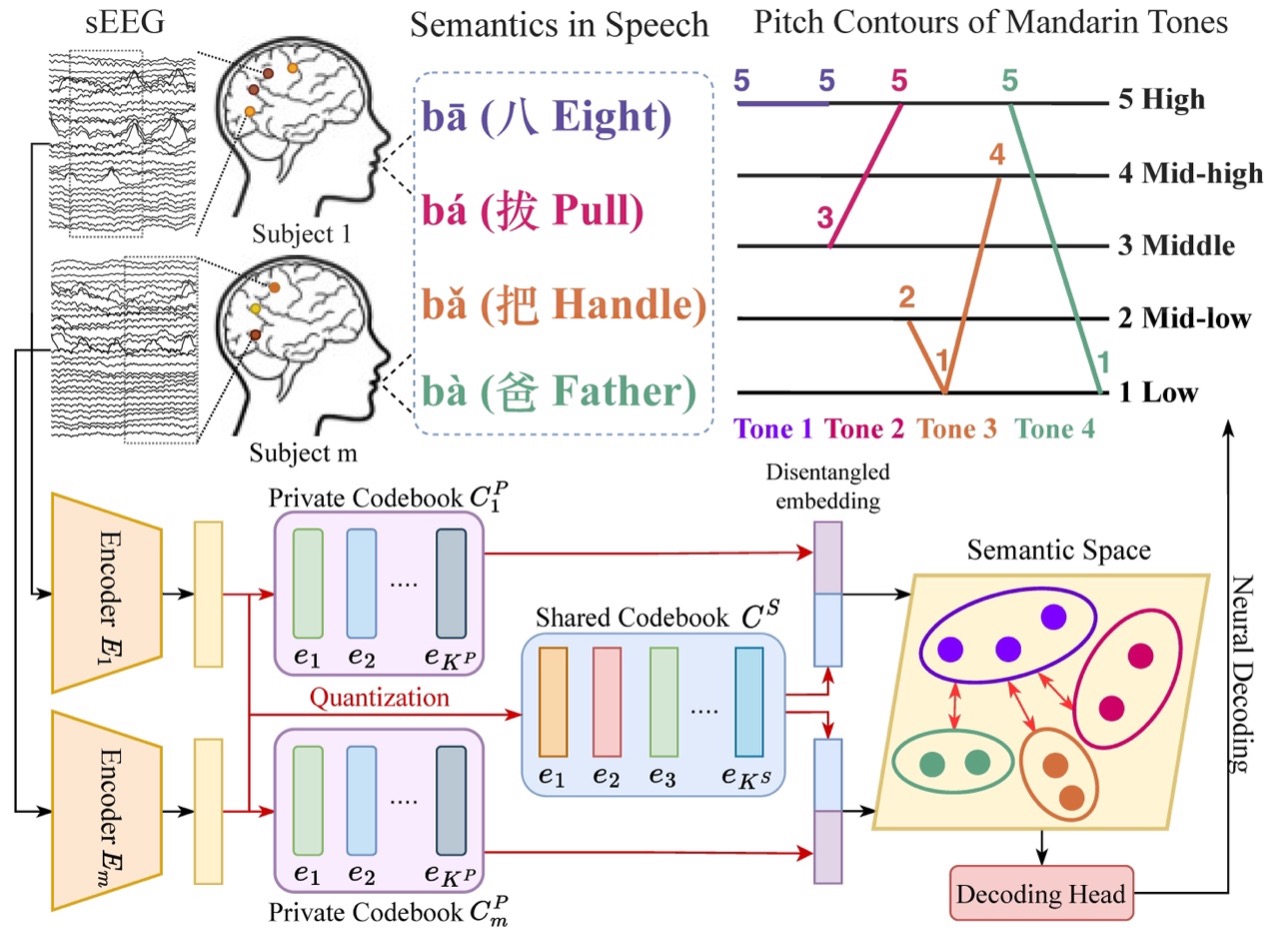

A paper by Yankun Xu, a Ph.D. graduate of Prof. Mohamad Sawan's group, was accepted at ECCV 2024.

/Yankun Xu with the poster of his accepted paper/

“VSViG: Real-time Video-based Seizure Detection via Skeleton-based Spatiotemporal ViG”

Xu, Y., Wang, J., Chen, Y. H., Yang, J., Ming, W., Wang, S., & Sawan, M. VSViG: Real-time Video-based Seizure Detection via Skeleton-based Spatiotemporal ViG. 18th European Conference on Computer Vision (ECCV), 2024.

Abstract

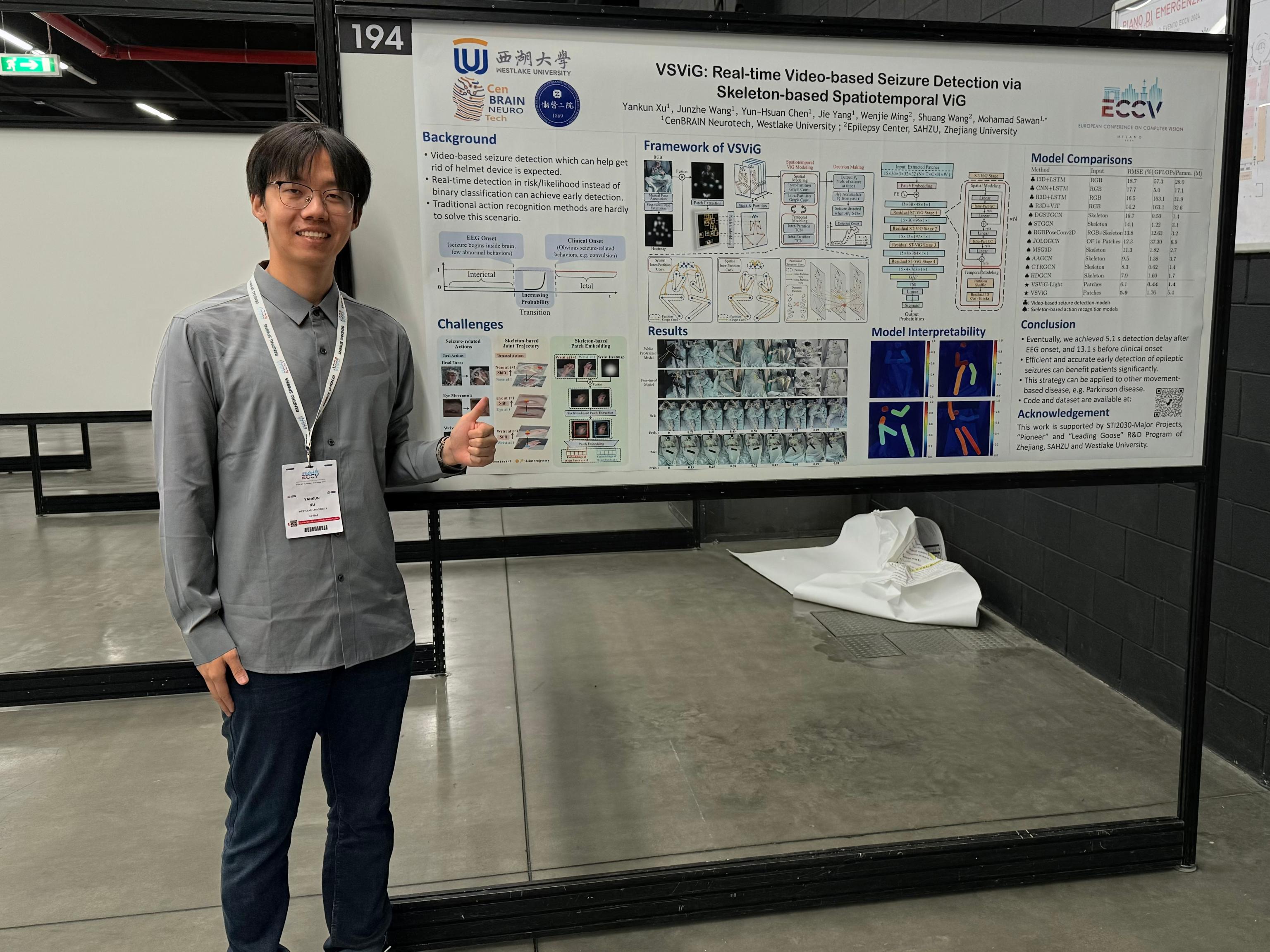

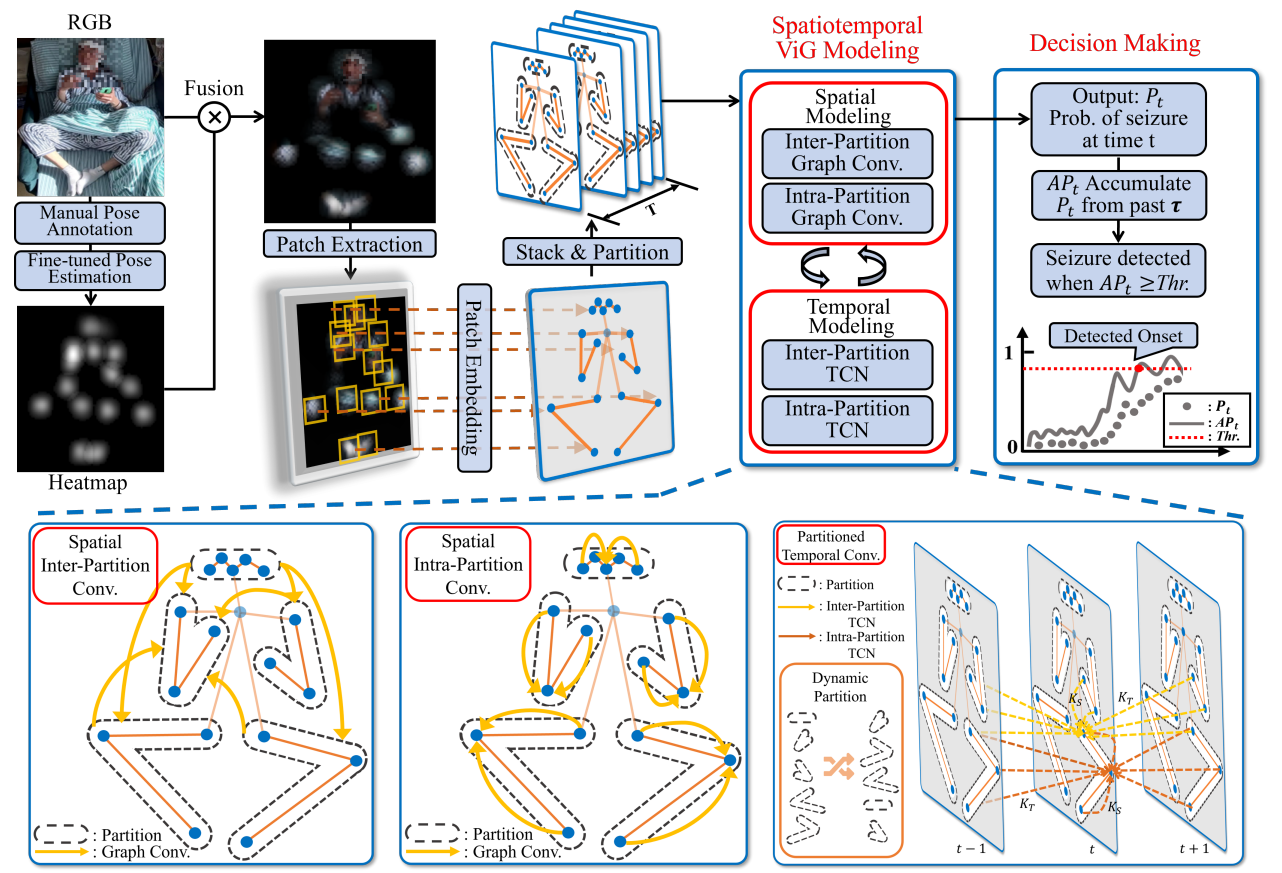

Accurate and efficient detection of epileptic seizure onset can significantly benefit patients. Traditional diagnostic methods, which rely primarily on electroencephalograms (EEGs), often result in cumbersome and non-portable solutions, making continuous patient monitoring challenging. A video-based seizure detection system is expected to free patients from the constraints of scalp or implanted EEG devices and enable remote monitoring in residential settings. Previous video-based methods neither enable all-day monitoring nor provide short detection latency, owing to insufficient resources and ineffective patient action recognition techniques. Additionally, skeleton-based action recognition approaches still have limitations in identifying subtle seizure-related actions. To address these challenges, we propose a novel Video-based Seizure detection model via a skeleton-based spatiotemporal Vision Graph neural network (VSViG), designed for efficient, accurate and timely detection in real-time scenarios. Our experimental results indicate that VSViG outperforms previous state-of-the-art action recognition models on our collected patients' video data, with higher accuracy (5.9% error), lower FLOPs (0.4G), and a smaller model size (1.4M). Furthermore, by integrating a decision-making rule that combines output probabilities and an accumulative function, we achieve a 5.1 s detection latency after EEG onset, a 13.1 s detection advance before clinical onset, and a zero false detection rate. The project homepage is available at: https://github.com/xuyankun/VSViG/

Fig.1. Proposed VSViG framework

Fig.2. Predictive likelihoods/probabilities of video clips from two seizures.
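The abstract describes the alarm logic only at a high level: clip-level output probabilities are combined with an accumulative function. Since the exact rule is not given here, the Python sketch below is a hypothetical illustration of that general idea, not the VSViG implementation. It assumes a stream of per-clip seizure probabilities like those plotted in Fig. 2; the function name `detect_onset` and the parameters `prob_threshold`, `accum_threshold` and `decay` are invented for illustration, and their default values are arbitrary placeholders.

```python
from typing import Optional, Sequence


def detect_onset(
    probs: Sequence[float],
    prob_threshold: float = 0.8,   # hypothetical: a clip counts as "seizure-like" at or above this
    accum_threshold: float = 3.0,  # hypothetical: the alarm fires once accumulated evidence reaches this
    decay: float = 0.5,            # hypothetical: evidence removed for each non-seizure-like clip
) -> Optional[int]:
    """Return the index of the clip at which an alarm would fire, or None.

    Illustrative accumulate-and-threshold rule (NOT the published VSViG rule):
    seizure-like clips add their probability to a running score, other clips
    drain it, and an alarm is raised when the score crosses a threshold.
    """
    score = 0.0
    for i, p in enumerate(probs):
        if p >= prob_threshold:
            score += p                       # accumulate evidence
        else:
            score = max(0.0, score - decay)  # drain evidence, never below zero
        if score >= accum_threshold:
            return i
    return None


if __name__ == "__main__":
    # Isolated high-probability clips never build up enough evidence: no alarm.
    print(detect_onset([0.9, 0.1, 0.9, 0.1, 0.9, 0.1]))
    # A sustained run of high probabilities triggers an alarm at clip index 5.
    print(detect_onset([0.1, 0.9, 0.95, 0.2, 0.9, 0.9, 0.9]))
```

Requiring evidence to build up over several clips trades a little detection latency for robustness against isolated high-probability clips, which is the kind of balance the abstract reports (a short latency together with a zero false detection rate).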

The road of academic research is long, and the journey continues. Having a paper accepted at ECCV, one of the top three computer vision conferences in the world, is undoubtedly strong recognition of Yankun's hard work and unremitting pursuit during his Ph.D. study. Yankun is especially grateful to CenBRAIN Neurotech and his supervisor, Prof. Mohamad Sawan, for supporting him in representing our Center and presenting his poster at the conference in Milan. He said, “The several days in Milan gave me a precious opportunity to communicate with many industry leaders, senior researchers and students. Here, I saw many advanced contributions and experienced first-hand the rapid development of cutting-edge technology in computer vision, which helped me think more about the future direction of my research.”

/The Duomo at sunset and an overlook of Florence, photos by Yankun Xu/